How to: Azure OpenAI instead of ChatGPT integration (on-premise)

Hi,

Some time ago I reached out to ServiceDesk Plus support to ask whether it was possible to use Azure OpenAI instead of ChatGPT for the ChatGPT integration. They said they would look into it, but I feared it might take a while for them to develop. So I started building my own integration and got it working. I am sharing this information for anyone else who might find it useful.

Purpose:

The purpose of the integration is to keep using the existing ChatGPT integration, but send the queries to Azure OpenAI instead of ChatGPT. This is useful in cases where ChatGPT is not used in the organization, but Azure OpenAI is available.

Requirements:

- Azure Subscription

- Azure Function

- Azure OpenAI deployment

- Nginx Reverse Proxy

- Visual Studio Code

I will not be going into depth on how to set up the different services. I will however provide guidance on how to use the scripts and the basic configurations.

Azure OpenAI

In Azure OpenAI, deploy a new model. I suggest using gpt-35-turbo for faster responses. Note down the following information from the deployment:

(Below are only examples)

Deployment Name: gpt-35-turbo

Target URI: https://DEPLOYMENTNAME.openai.azure.com/openai/deployments/gpt-35-turbo/chat/completions?api-version=2025-01-01-preview

Key: 5f237b736cd54b84dw379998f2i82c6

You do not need to do anything further in this deployment.
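The Target URI already contains most of what the function settings need later. As a minimal sketch (not part of the attached script), the helper below splits a Target URI into the base endpoint, the deployment name and the API version. The function name split_target_uri is made up for this example, and whether the attached script expects the base endpoint or the full Target URI in its settings is something to check in the script itself.

```python
from urllib.parse import parse_qs, urlsplit


def split_target_uri(target_uri):
    """Split an Azure OpenAI Target URI into endpoint, deployment and API version."""
    parts = urlsplit(target_uri)
    segments = parts.path.strip("/").split("/")
    # Expected path layout: openai/deployments/<deployment>/chat/completions
    deployment = segments[segments.index("deployments") + 1]
    api_version = parse_qs(parts.query).get("api-version", [None])[0]
    return {
        "endpoint": f"{parts.scheme}://{parts.netloc}",
        "deployment": deployment,
        "api_version": api_version,
    }


# Example Target URI from this post (placeholder resource name).
info = split_target_uri(
    "https://DEPLOYMENTNAME.openai.azure.com/openai/deployments/"
    "gpt-35-turbo/chat/completions?api-version=2025-01-01-preview"
)
print(info["endpoint"], info["deployment"], info["api_version"])
```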

Azure Function created with Visual Studio Code

In Visual Studio Code, install the following extensions:

- Azure Functions

- Azure Developer CLI

- Azure Tools

- Python

- Pylance

- Microsoft Entra External ID

(not all of these are required, but nice to have)

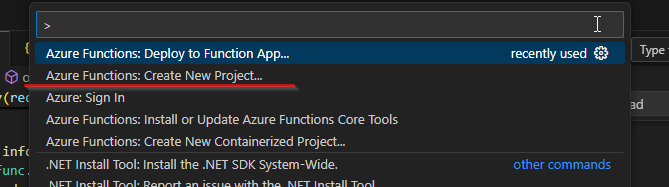

1. Press CTRL+SHIFT+P and choose to create a new project:

2. For the runtime stack choose "Python"

3. For the trigger choose "HTTP trigger" and name it "http_trigger"

4. For the authorization choose "Function"

You will get a new project that looks like this

5. Open up "function_app.py" and paste in the script of the same name attached to this post. You do not need to make any changes to the script.

6. Open up "host.json" and paste the following:

    {
      "version": "2.0",
      "extensions": {
        "http": {
          "routePrefix": ""
        }
      }
    }

7. Open "requirements.txt" and paste the following:

    azure-functions
    requests

8. In Visual Studio Code, choose the Azure icon in the left panel and make sure that you are signed in.

9. Go back to your function_app.py, press CTRL+SHIFT+P and deploy the function app.

10. Go to the Azure Function in your Azure portal and find "Environment Variables" under "Settings". Add the following settings:

- AZURE_OPENAI_DEPLOYMENT_NAME

- AZURE_OPENAI_ENDPOINT

- AZURE_OPENAI_KEY

- EXPECTED_SDP_API_KEY

The values for the first three settings are the ones you noted down from your Azure OpenAI deployment earlier. For EXPECTED_SDP_API_KEY you can use whatever value you want. I would suggest using the same value as the Azure OpenAI deployment key. This is the value you will enter as the API key in the ChatGPT integration in ServiceDesk Plus.
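Function app settings are exposed to the Python code as environment variables. How the attached script reads them is not shown in this post, so the following is only a sketch of one way to load the four settings and fail early if one is missing. The names REQUIRED_SETTINGS and load_settings are made up for this example.

```python
import os

# The four setting names from step 10.
REQUIRED_SETTINGS = (
    "AZURE_OPENAI_DEPLOYMENT_NAME",
    "AZURE_OPENAI_ENDPOINT",
    "AZURE_OPENAI_KEY",
    "EXPECTED_SDP_API_KEY",
)


def load_settings(environ=os.environ):
    """Return the settings as a dict, or raise if any is missing or empty."""
    missing = [name for name in REQUIRED_SETTINGS if not environ.get(name)]
    if missing:
        raise RuntimeError("Missing app settings: " + ", ".join(missing))
    return {name: environ[name] for name in REQUIRED_SETTINGS}
```

Calling load_settings() at the top of a request handler gives a clear error message in the function logs when a setting was forgotten, instead of a failure later in the request.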

11. Restart the function app. It should now be fully configured.

12. Go to "Keys" under "Functions" and copy the default key. It will be used in the Nginx config.

13. Go to "Overview" and copy the default domain of the Azure Function.

Nginx configuration:

You need to configure an Nginx reverse proxy that passes the Azure Function key along with each request. Create a new configuration file named api.openai.com.conf in /etc/nginx/sites-enabled and paste the following configuration:

    server {
        listen 443 ssl;
        server_name api.openai.com;
        ssl_certificate /etc/nginx/api-openai-selfsigned.crt;
        ssl_certificate_key /etc/nginx/api-openai-selfsigned.key;
        ssl_stapling off;
        ssl_stapling_verify off;
        location / {
            proxy_ssl_server_name on;
            proxy_ssl_verify off;
            # Host should be the default domain of your Azure Function
            proxy_set_header Host {AZURE FUNCTION URL};
            proxy_set_header X-Real-IP $remote_addr;
            # Explicitly forward the original URI and the function key:
            proxy_pass https://{AZURE FUNCTION URL}/api$uri?code={YOUR FUNCTION KEY};
        }
    }

Replace {AZURE FUNCTION URL} with the default domain of your Azure Function and {YOUR FUNCTION KEY} with the function key from your Azure Function. For example:

    proxy_pass https://servicedesk-openai-proxy.azurewebsites.net/api$uri?code=GkkB53PUzrrvm_IRorNdkwoikdwakpE0loKOs8b-dkjwiokwuNUqeQQ==;
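To make the rewrite easier to follow, here is a small Python sketch that mimics what the proxy_pass line does to an incoming URL. It is only an illustration of the mapping, not something you need to deploy. The request path /v1/chat/completions and the key are made-up examples, and the function name upstream_url is hypothetical. Nginx also normalizes $uri, which this sketch leaves out.

```python
from urllib.parse import urlsplit


def upstream_url(function_host, function_key, request_url):
    """Mimic the proxy_pass line: https://<host>/api<path>?code=<key>."""
    # $uri holds only the request path, so a query string sent by the
    # client is not forwarded; only ?code=<key> is appended.
    path = urlsplit(request_url).path or "/"
    return f"https://{function_host}/api{path}?code={function_key}"


# Hypothetical request path and made-up key, for illustration only.
print(upstream_url(
    "servicedesk-openai-proxy.azurewebsites.net",
    "EXAMPLE_KEY==",
    "https://api.openai.com/v1/chat/completions?ignored=1",
))
# prints https://servicedesk-openai-proxy.azurewebsites.net/api/v1/chat/completions?code=EXAMPLE_KEY==
```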

Since ServiceDesk Plus verifies SSL certificates, we need to create a self-signed certificate for the proxy. To do so, run the following commands on your Nginx server. When openssl asks for the Common Name, enter api.openai.com so that the certificate matches the host name ServiceDesk Plus connects to.

    # Create a private key
    openssl genrsa -out /etc/nginx/api-openai-selfsigned.key 2048
    # Create a certificate signing request (CSR)
    openssl req -new -key /etc/nginx/api-openai-selfsigned.key -out /etc/nginx/api-openai.csr
    # Self-sign the certificate (valid for 10 years)
    openssl x509 -req -days 3650 -in /etc/nginx/api-openai.csr -signkey /etc/nginx/api-openai-selfsigned.key -out /etc/nginx/api-openai-selfsigned.crt

Copy the certificate (api-openai-selfsigned.crt, not the private key) to C:\temp on your ServiceDesk Plus server and run the following commands in a CMD window as administrator:

    cd "C:\Program Files\ManageEngine\ServiceDesk\jre\bin"
    keytool -import -trustcacerts -alias api-openai -file "C:\temp\api-openai-selfsigned.crt" -keystore "C:\Program Files\ManageEngine\ServiceDesk\jre\lib\security\cacerts" -storepass changeit

Restart the ServiceDesk Plus server and reload Nginx.

Finally, update the hosts file on your ServiceDesk Plus server so that "api.openai.com" points to your Nginx reverse proxy server.
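A hosts entry is a single line with the IP address followed by the host name, for example "10.0.0.5 api.openai.com", where 10.0.0.5 is a placeholder for the address of your Nginx server. If you want to double-check the entry, the sketch below looks up a host name in the text of a hosts file. The function name hosts_lookup and the sample content are made up for this example.

```python
def hosts_lookup(hosts_text, hostname):
    """Return the first IP address mapped to hostname in hosts-file text, or None."""
    wanted = hostname.lower()
    for line in hosts_text.splitlines():
        # Drop comments, then split into "<ip> <name> [<alias> ...]".
        entry = line.split("#", 1)[0].split()
        if len(entry) >= 2 and wanted in (name.lower() for name in entry[1:]):
            return entry[0]
    return None


# Sample hosts content; 10.0.0.5 is a placeholder for your Nginx server.
sample = """
# local overrides
127.0.0.1   localhost
10.0.0.5    api.openai.com   # nginx reverse proxy
"""
print(hosts_lookup(sample, "api.openai.com"))  # prints 10.0.0.5
```

On Windows the hosts file is normally C:\Windows\System32\drivers\etc\hosts, so you could pass the contents of that file instead of the sample string.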

You should now be able to enable the ChatGPT integration in ServiceDesk Plus. For Organization Name and Organization ID, put whatever values you want. For the API key, put the value you specified as EXPECTED_SDP_API_KEY in your Azure Function.

Requests that ServiceDesk Plus thinks it is sending to api.openai.com are now actually sent to your Nginx reverse proxy, which rewrites the URL and forwards the request to your Azure Function. The Azure Function rewrites the request and the response into the format that ServiceDesk Plus expects, so it should work just as if you used ChatGPT for the integration.
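To give an idea of what the translation step can look like, here is a rough sketch of the request side only. It is not the attached function_app.py, and it assumes that ServiceDesk Plus sends an OpenAI-style chat request with an "Authorization: Bearer" header, which I have not documented here. The function names and the default API version are assumptions for this example.

```python
import hmac


def is_authorized(headers, expected_key):
    """Check the 'Authorization: Bearer <key>' header against the expected key."""
    scheme, _, token = headers.get("Authorization", "").partition(" ")
    return scheme.lower() == "bearer" and hmac.compare_digest(
        token.strip().encode("utf-8"), expected_key.encode("utf-8")
    )


def to_azure_request(openai_body, endpoint, deployment, api_key,
                     api_version="2025-01-01-preview"):
    """Translate an OpenAI-style chat request into URL, headers and body for Azure OpenAI."""
    url = (
        f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
        f"/chat/completions?api-version={api_version}"
    )
    # Azure picks the model through the deployment name in the URL,
    # so this sketch drops the "model" field from the body.
    body = {key: value for key, value in openai_body.items() if key != "model"}
    # Azure OpenAI expects the key in an "api-key" header, not a Bearer token.
    headers = {"Content-Type": "application/json", "api-key": api_key}
    return url, headers, body
```

A handler would first call is_authorized with the incoming headers and EXPECTED_SDP_API_KEY, then send the result of to_azure_request to Azure OpenAI and pass the answer back to ServiceDesk Plus.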

I might have missed a step or two, and some information might not be correct. Feel free to reach out to me for assistance in case it does not work for you. I might be able to help, but there is no guarantee. It might also not be the best way to do this. But it works.